When it comes to actually storing the numerical weights that power a large language model's underlying neural network, most modern AI models rely on the precision of 16- or 32-bit floating point numbers. But that level of precision can come at the cost of large memory footprints (in the hundreds of gigabytes for the largest models) and significant processing resources needed for the complex matrix multiplication used when responding to prompts.

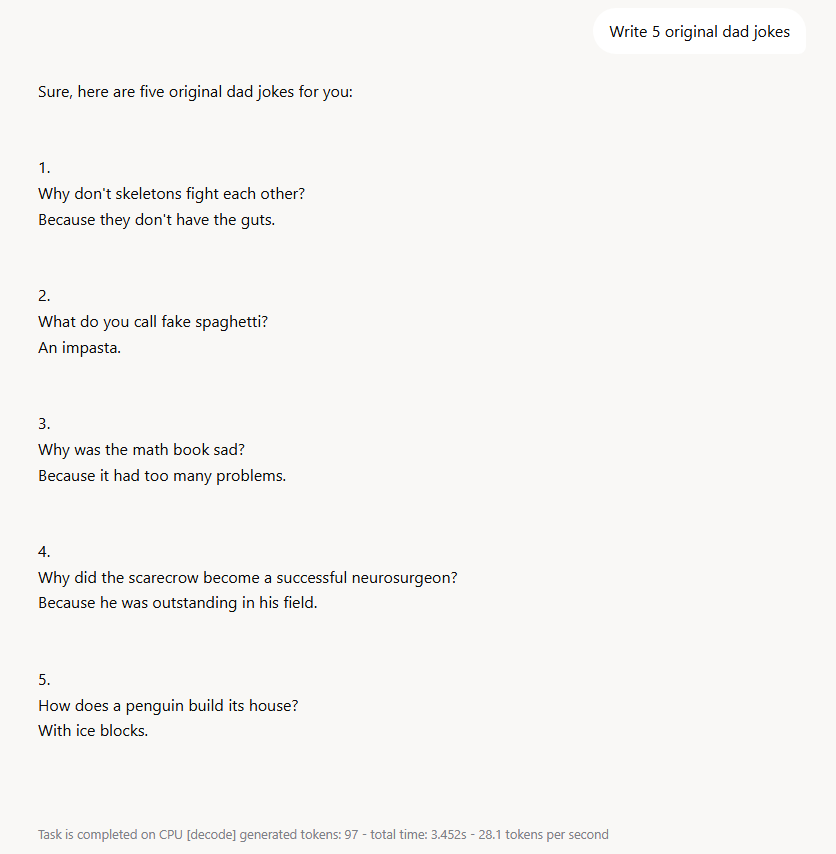

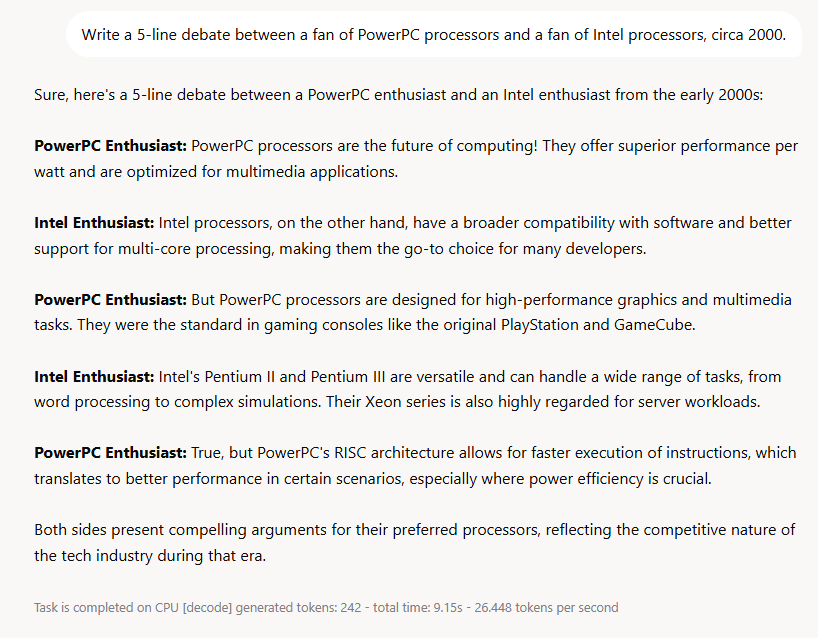

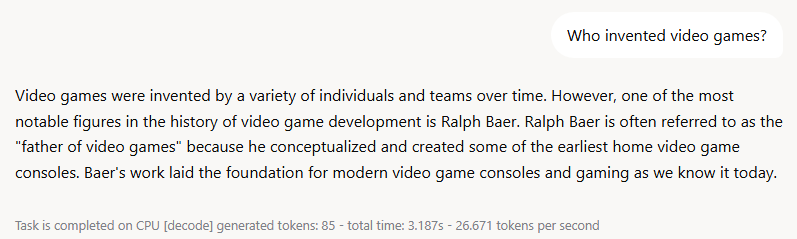

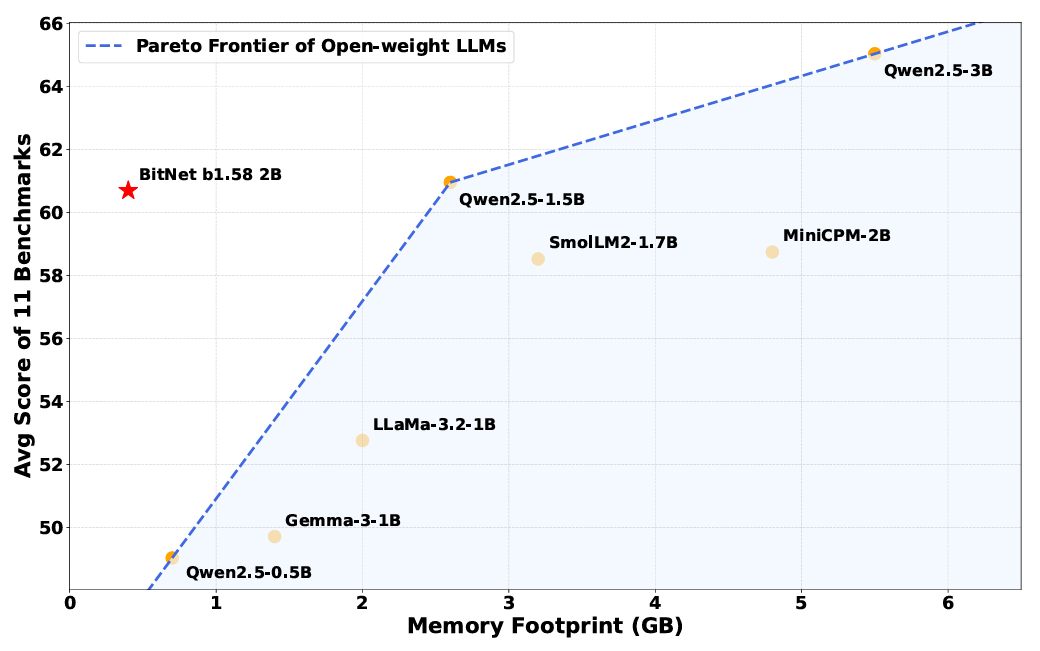

Now, researchers at Microsoft's General Artificial Intelligence group have released a new neural network model that works with just three distinct weight values: -1, 0, or 1. Building on top of previous work Microsoft Research published in 2023, the new model's "ternary" architecture reduces overall complexity and offers "substantial advantages in computational efficiency," the researchers write, allowing it to run effectively on a simple desktop CPU. And despite the massive reduction in weight precision, the researchers claim that the model "can achieve performance comparable to leading open-weight, full-precision models of similar size across a wide range of tasks."
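To see why weights limited to -1, 0, and 1 can lighten the computational load, consider a rough sketch of a matrix-vector product in which every "multiplication" collapses into an addition, a subtraction, or a skip. This is only an illustration of the general idea; the function name `ternary_matvec` and the sample values are hypothetical and are not taken from Microsoft's code.

```python
def ternary_matvec(weights, x):
    """Multiply a ternary weight matrix by a vector using only adds and subtracts."""
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi      # a weight of +1 adds the input
            elif w == -1:
                acc -= xi      # a weight of -1 subtracts the input
            # a weight of 0 contributes nothing, so it is skipped
        out.append(acc)
    return out


# Hypothetical 2x3 ternary weight matrix and input vector.
W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, -1.0]

result = ternary_matvec(W, x)
print(result)  # [1.5, 0.5]
```

Real inference kernels are far more elaborate than this loop, but the absence of floating-point multiplications in the inner step is the basic source of the savings.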

Watching your weights

The idea of simplifying model weights isn't a completely new one in AI research. For years, researchers have been experimenting with quantization techniques that squeeze their neural network weights into smaller memory envelopes. In recent years, the most extreme quantization efforts have focused on so-called "BitNets" that represent each weight in a single bit (representing +1 or -1).

The new BitNet b1.58b model doesn't go quite that far. Its ternary system is referred to as "1.58-bit," since that's the average number of bits needed to represent three values (log(3)/log(2) ≈ 1.58). But it sets itself apart from previous research by being "the first open-source, native 1-bit LLM trained at scale," resulting in a 2-billion-parameter model trained on a dataset of 4 trillion tokens, the researchers write.
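The arithmetic behind the "1.58-bit" label, and what it could mean for memory, can be sketched in a few lines. The figures below are back-of-the-envelope estimates that assume roughly 2 billion weights and count only weight storage (no activations or other overhead). The packing helpers are hypothetical, shown only to illustrate that five ternary values fit in one byte (3^5 = 243 ≤ 256), or 1.6 bits per weight; they are not the storage format the researchers describe.

```python
import math

# Information content of one three-valued weight, in bits.
bits_per_ternary_weight = math.log2(3)  # roughly 1.585


def weight_memory_gb(n_params, bits_per_weight):
    """Weight storage in gigabytes (10**9 bytes), ignoring all other overhead."""
    return n_params * bits_per_weight / 8 / 1e9


n_params = 2_000_000_000  # assumed size: about 2 billion weights
fp16_gb = weight_memory_gb(n_params, 16)
ternary_gb = weight_memory_gb(n_params, bits_per_ternary_weight)


def pack_trits(trits):
    """Pack up to five values from {-1, 0, 1} into one integer below 243."""
    value = 0
    for t in reversed(trits):
        value = value * 3 + (t + 1)  # map -1, 0, 1 to base-3 digits 0, 1, 2
    return value


def unpack_trits(byte, count=5):
    """Recover `count` ternary values from a packed integer."""
    trits = []
    for _ in range(count):
        trits.append(byte % 3 - 1)
        byte //= 3
    return trits


packed = pack_trits([1, 0, -1, -1, 1])
print(f"{bits_per_ternary_weight:.3f} bits per weight")
print(f"16-bit weights: {fp16_gb:.1f} GB, ternary lower bound: {ternary_gb:.2f} GB")
print(packed, unpack_trits(packed))
```

Under these assumptions, the weights drop from about 4 GB at 16-bit precision to roughly 0.4 GB, which helps explain how such a model can fit comfortably on ordinary desktop hardware.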

The "native" bit is key there, since many previous quantization efforts simply attempted after-the-fact size reductions on pre-existing models trained at "full precision" using those large floating-point values. That kind of post-training quantization can lead to "significant performance degradation" compared to the original full-precision models, the researchers write. Other natively trained BitNet models, meanwhile, have been at smaller scales that "may not yet match the capabilities of larger, full-precision counterparts," they write.
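A toy example gives a feel for why after-the-fact rounding can hurt. The sketch below squeezes a handful of made-up floating-point weights into -1, 0, or 1 using one simple scheme (dividing by the mean absolute value, then rounding and clipping). The scheme, the function names, and the sample numbers are all assumptions chosen for illustration; the article does not specify the method any particular model uses.

```python
def ternarize(weights, eps=1e-8):
    """Round weights to {-1, 0, 1} after scaling by their mean absolute value."""
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    quantized = [max(-1, min(1, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map ternary values back to approximate floating-point weights."""
    return [q * scale for q in quantized]


# Made-up "full precision" weights, for illustration only.
weights = [0.9, -0.05, 0.4, -1.2, 0.02, 0.6]

q, scale = ternarize(weights)
approx = dequantize(q, scale)
max_error = max(abs(w - a) for w, a in zip(weights, approx))

print(q)  # [1, 0, 1, -1, 0, 1]
print(f"largest reconstruction error: {max_error:.3f}")
```

Weights as different as 0.4 and 0.9 land on the same value, so a model that was trained expecting the originals now sees something noticeably different. A natively trained model, by contrast, learns its weights with the three-value limit in place from the start.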
