2025-07-11

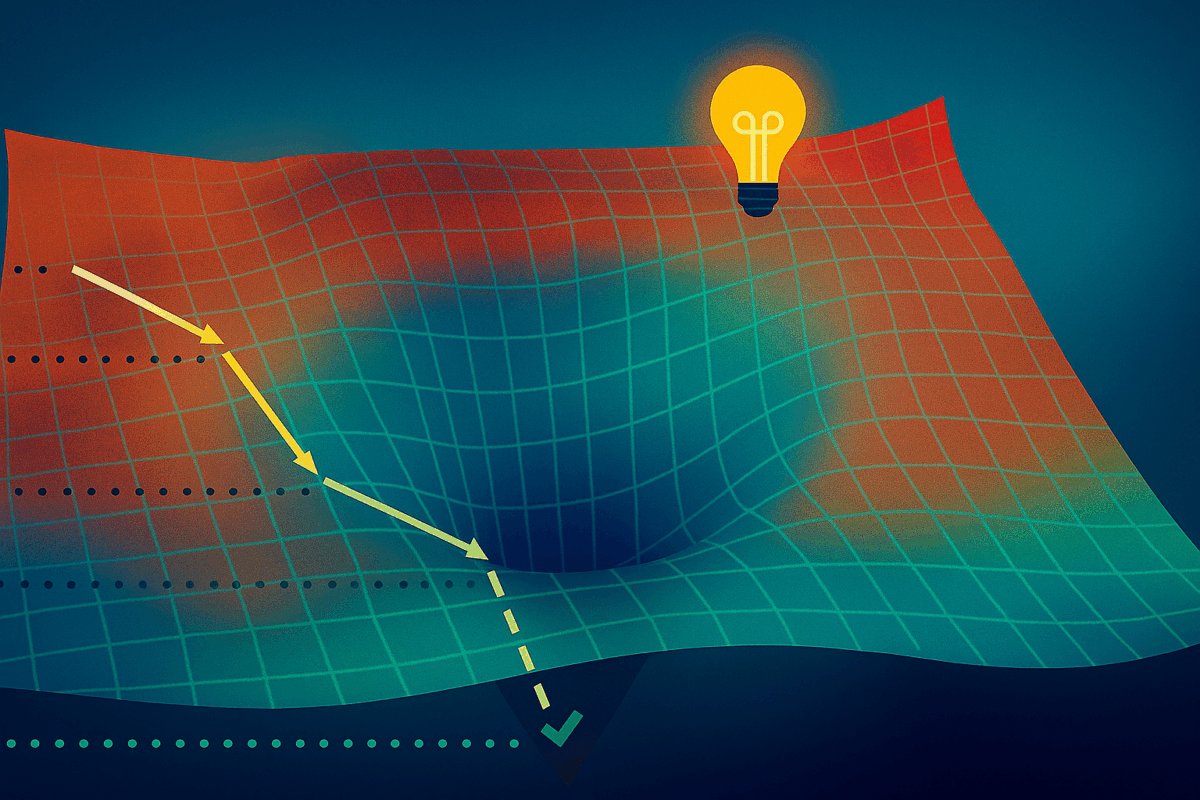

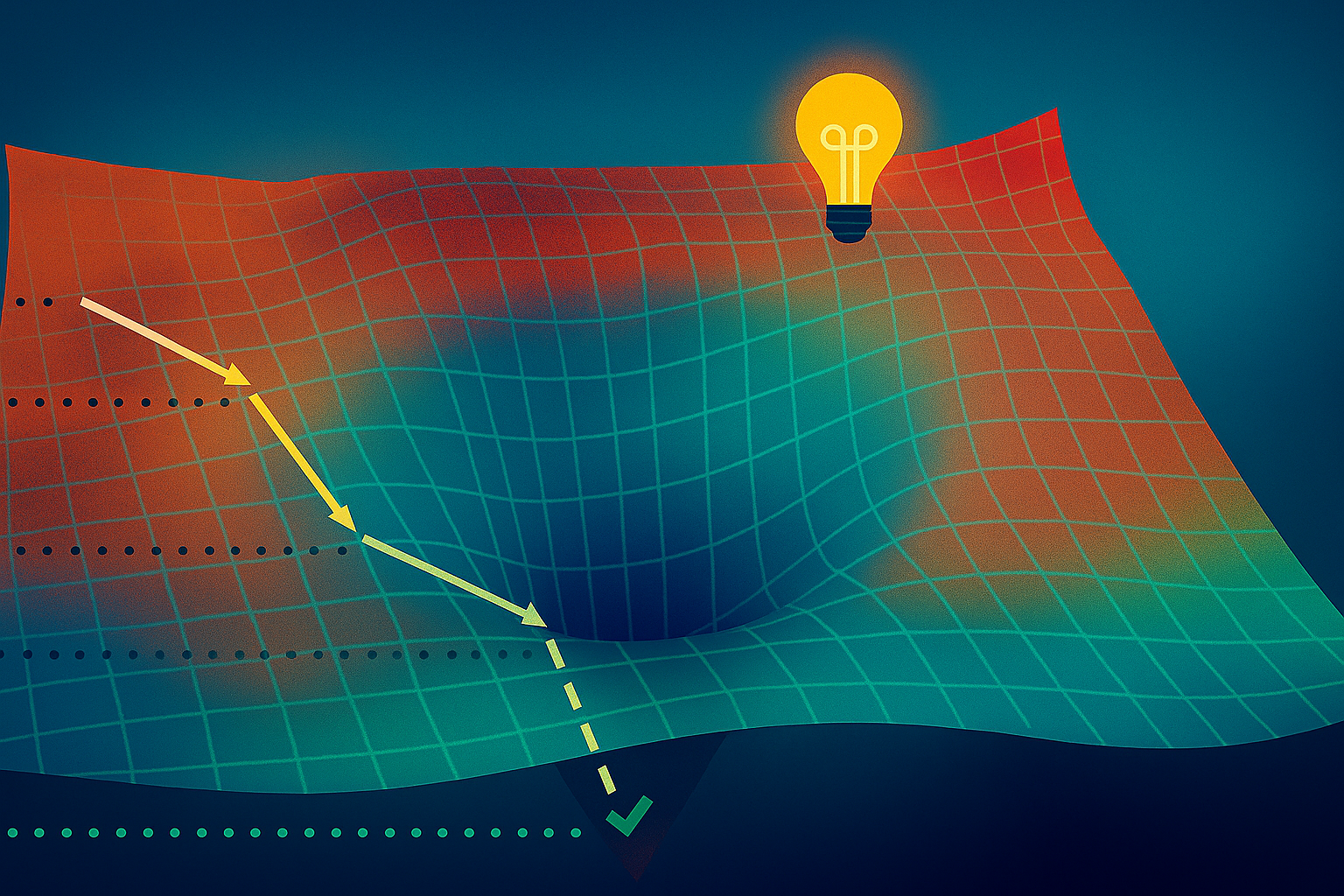

New Energy-Based Transformer architecture aims to bring better "System 2 thinking" to AI models

A new architecture called Energy-Based Transformer is designed to teach AI models to solve problems analytically and step by step.

The article New Energy-Based Transformer architecture aims to bring better "System 2 thinking" to AI models appeared first on THE DECODER.

2025-11-04

Attention ISN'T all you need?! New Qwen3 variant Brumby-14B-Base leverages Power Retention technique

When the transformer architecture was introduced in 2017 in the now seminal Google paper "Attention Is All You Need," it became an instant cornerstone of modern artificial intelligence. Ever [...]

2025-12-12

In 2025, AI and EVs gave the US an insatiable hunger for power

You may be surprised to learn electricity only accounts for 21 percent of the world’s energy consumption. Fossil fuels and the rest all play their part to make the world go around, but their role is [...]

2025-10-02

'Western Qwen': IBM wows with Granite 4 LLM launch and hybrid Mamba/Transformer architecture

IBM today announced the release of Granite 4.0, the newest generation of its homemade family of open source large language models (LLMs) designed to balance high performance with lower memory and cost [...]

2025-10-28

IBM's open source Granite 4.0 Nano AI models are small enough to run locally directly in your browser

In an industry where model size is often seen as a proxy for intelligence, IBM is charting a different course — one that values efficiency over enormity, and accessibility over abstraction. The 114-y [...]

2025-10-23

Sakana AI's CTO says he's 'absolutely sick' of transformers, the tech that powers every major AI model

In a striking act of self-critique, one of the architects of the transformer technology that powers ChatGPT, Claude, and virtually every major AI system told an audience of industry leaders this week [...]

2025-12-15

Nvidia debuts Nemotron 3 with hybrid MoE and Mamba-Transformer to drive efficient agentic AI

Nvidia launched the new version of its frontier models, Nemotron 3, by leaning in on a model architecture that the world’s most valuable company said offers more accuracy and reliability for agents. [...]

2025-11-03

The beginning of the end of the transformer era? Neuro-symbolic AI startup AUI announces new funding at $750M valuation

The buzzed-about but still stealthy New York City startup Augmented Intelligence Inc (AUI), which seeks to go beyond the popular "transformer" architecture used by most of today's LLMs [...]

2025-11-21

Google’s ‘Nested Learning’ paradigm could solve AI's memory and continual learning problem

Researchers at Google have developed a new AI paradigm aimed at solving one of the biggest limitations in today’s large language models: their inability to learn or update their knowledge after trai [...]