2025-07-21

Yet another study finds that overloading LLMs with information leads to worse results

Large language models are supposed to handle millions of tokens - the fragments of words and characters that make up their inputs - at once. But the longer the context, the worse their performance gets.

The article "Yet another study finds that overloading LLMs with information leads to worse results" appeared first on THE DECODER.

2025-10-16

ACE prevents context collapse with ‘evolving playbooks’ for self-improving AI agents

A new framework from Stanford University and SambaNova addresses a critical challenge in building robust AI agents: context engineering. Called Agentic Context Engineering (ACE), the framework automat [...]

2025-11-23

Lean4: How the theorem prover works and why it's the new competitive edge in AI

Large language models (LLMs) have astounded the world with their capabilities, yet they remain plagued by unpredictability and hallucinations – confidently outputting incorrect information. In high- [...]

2025-09-01

LLMs struggle with clinical reasoning and are just matching patterns, study finds

A new study in JAMA Network Open raises fresh doubts about whether large language models (LLMs) can actually reason through medical cases or if they're just matching patterns they've seen be [...]

2025-04-22

So-called reasoning models are more efficient but not more capable than regular LLMs, study finds

A new study from Tsinghua University and Shanghai Jiao Tong University examines whether reinforcement learning with verifiable rewards (RLVR) helps large language models reason better—or simply make [...]

2025-10-13

Self-improving language models are becoming reality with MIT's updated SEAL technique

Researchers at the Massachusetts Institute of Technology (MIT) are gaining renewed attention for developing and open sourcing a technique that allows large language models (LLMs) — like those underp [...]

2025-06-07

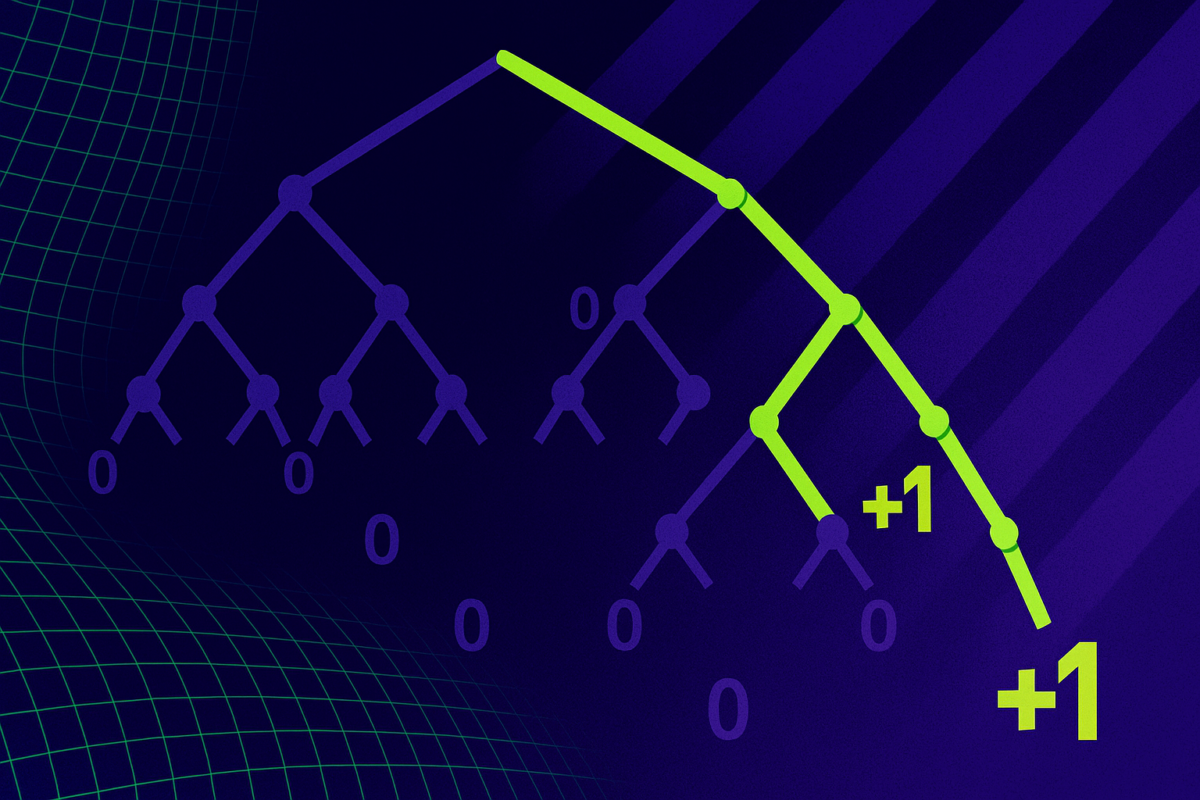

Apple study finds "a fundamental scaling limitation" in reasoning models' thinking abilities

LLMs designed for reasoning, like Claude 3.7 and DeepSeek-R1, are supposed to excel at complex problem-solving by simulating thought processes. But a new study by Apple researchers suggests that these [...]

2025-08-13

Norton VPN review: A VPN that fails to meet Norton's standards

One thing I need to make clear right from the start: this is a review of Norton VPN (formerly Norton Secure VPN, and briefly Norton Ultra VPN) as a standalone app, not of the VPN feature in the Norton [...]

2025-06-06

How to trace a picture's origin with reverse image search

Reverse image searching is a quick and easy way to trace the origin of an image, identify objects or landmarks, find higher-resolution alternatives or check if a photo has been altered or used elsewhe [...]