2025-05-04

Transformer models show surprising parallels to human thinking, study finds

A new study links layer-time dynamics in Transformer models with real-time human processing. The findings suggest that AI models may not only reach similar outputs as humans but could also follow similar "processing strategies" along the way.

The article Transformer models show surprising parallels to human thinking, study finds app [...]

Rating

Innovation

Pricing

Technology

Usability

We have discovered similar tools to what you are looking for. Check out our suggestions for similar AI tools.

2025-11-04

Attention ISN'T all you need?! New Qwen3 variant Brumby-14B-Base leverages Power Retention technique

When the transformer architecture was introduced in 2017 in the now seminal Google paper "Attention Is All You Need," it became an instant cornerstone of modern artificial intelligence. Ever [...]

2025-10-02

'Western Qwen': IBM wows with Granite 4 LLM launch and hybrid Mamba/Transformer architecture

IBM today announced the release of Granite 4.0, the newest generation of its homemade family of open source large language models (LLMs) designed to balance high performance with lower memory and cost [...]

2025-11-06

Moonshot's Kimi K2 Thinking emerges as leading open source AI, outperforming GPT-5, Claude Sonnet 4.5 on key benchmarks

Even as concern and skepticism grows over U.S. AI startup OpenAI's buildout strategy and high spending commitments, Chinese open source AI providers are escalating their competition and one has e [...]

2025-10-28

IBM's open source Granite 4.0 Nano AI models are small enough to run locally directly in your browser

In an industry where model size is often seen as a proxy for intelligence, IBM is charting a different course — one that values efficiency over enormity, and accessibility over abstraction.The 114-y [...]

2025-11-13

Upwork study shows AI agents excel with human partners but fail independently

Artificial intelligence agents powered by the world's most advanced language models routinely fail to complete even straightforward professional tasks on their own, according to groundbreaking re [...]

2025-11-12

Baidu just dropped an open-source multimodal AI that it claims beats GPT-5 and Gemini

Baidu Inc., China's largest search engine company, released a new artificial intelligence model on Monday that its developers claim outperforms competitors from Google and OpenAI on several visio [...]

2025-10-23

Sakana AI's CTO says he's 'absolutely sick' of transformers, the tech that powers every major AI model

In a striking act of self-critique, one of the architects of the transformer technology that powers ChatGPT, Claude, and virtually every major AI system told an audience of industry leaders this week [...]

2025-07-11

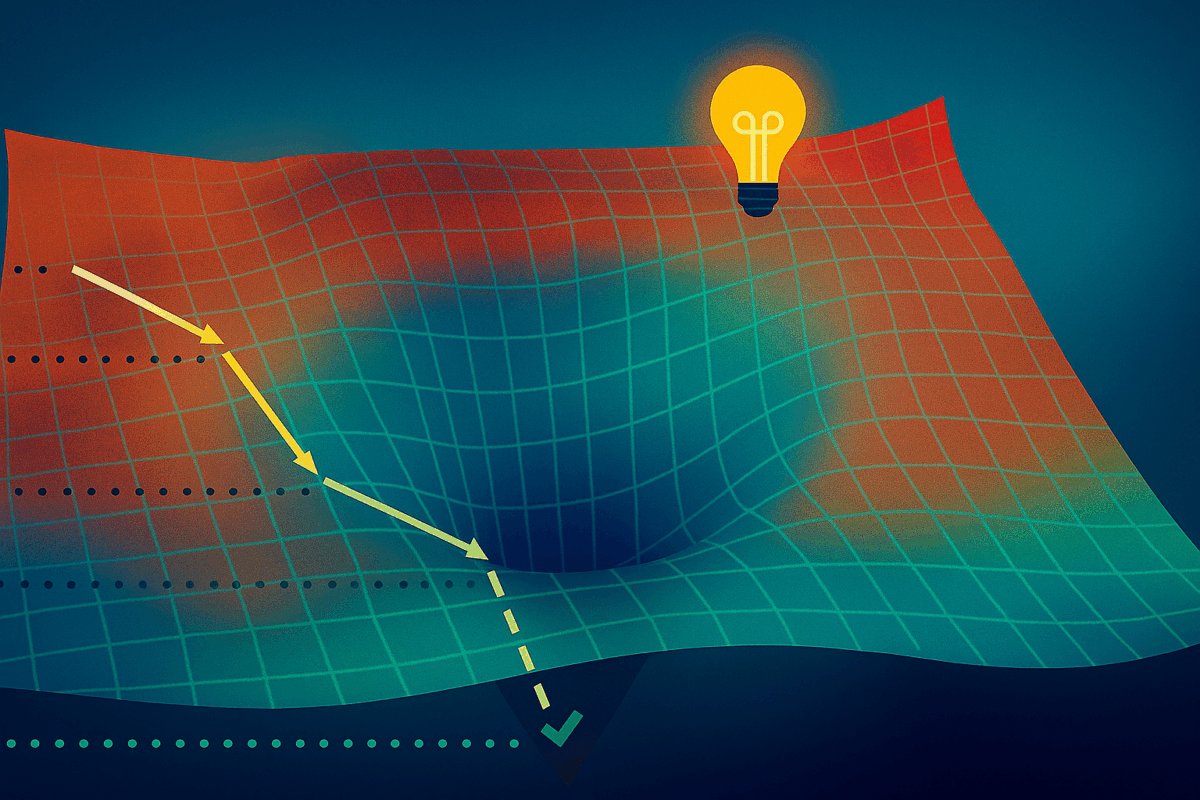

New Energy-Based Transformer architecture aims to bring better "System 2 thinking" to AI models

A new architecture called Energy-Based Transformer is designed to teach AI models to solve problems analytically and step by step.<br /> The article New Energy-Based Transformer architecture aim [...]